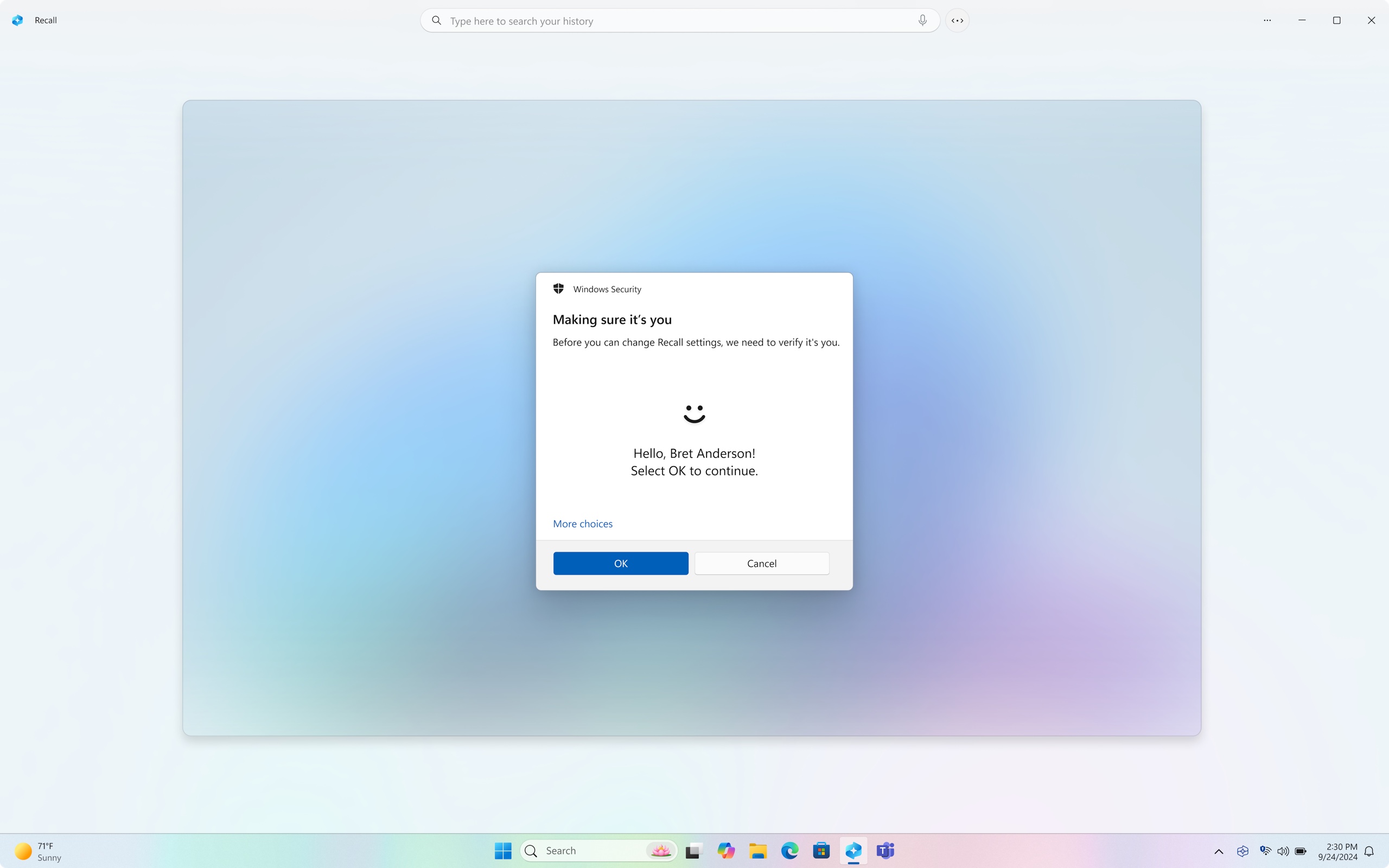

Users will be asked to reauthenticate with Windows Hello every time they access their Recall database.

Credit: Microsoft

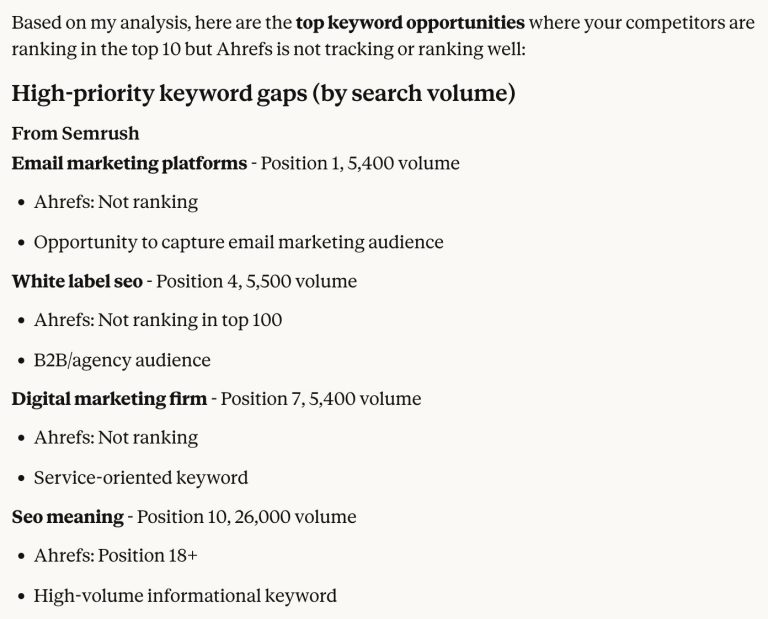

Microsoft has now delayed the feature multiple times to address those concerns, and in a September blog post it outlined several security-focused additions to Recall. Among other changes, the feature is now off by default (opt-in) and is protected by additional encryption. Users must also reauthenticate with Windows Hello each time they access the database, and turning on the feature requires Secure Boot, BitLocker disk encryption, and Windows Hello to be enabled. In addition to the manual exclusion lists for sites and apps, the new Recall also attempts to mask sensitive data like passwords and credit card numbers so they aren’t stored in the Recall database.

The new version of Recall can also be completely uninstalled, either by users who have no interest in it or by IT administrators who don’t want to risk it exposing sensitive data.

Testers will need to kick the tires on all of these changes to make sure they meaningfully address the risks and issues present in the original version of Recall, and this Windows Insider preview is their chance to do it.

“Do security”

Part of the original Recall controversy was that Microsoft wasn’t going to run it through the usual Windows Insider process—it was intended to be launched directly to users of the new Copilot+ PCs via a day-one software update. This in itself was a big red flag; usually, even features as small as spellcheck for the Notepad app go through multiple weeks of Windows Insider testing before Microsoft releases them to the public. This gives the company a chance to fix bugs, collect and address user feedback, and even scrub new features altogether.

Microsoft is supposedly re-orienting itself to put security over all other initiatives and features. CEO Satya Nadella recently urged employees to “do security” when presented with the option to either launch something quickly or launch something securely. In Recall’s case, the company’s rush to embrace generative AI features almost won out over that “do security” mandate. If future AI features go through the typical Windows Insider testing process first, that will be a sign that Microsoft is taking its commitment to security seriously.